feat: Whisper prompting #22496

|

Hey this PR looks really good (although I'll leave the actual review to Sanchit or Arthur). I was just wondering whether it also makes sense to support the In addition, there's this PR that suggests an |

Hey Matthijs thanks, I'm happy to add what's wanted. Will look for HF guidance on that and whether it should be added here or in a follow on PR. |

Replacing this with an actual conditional now. Any idea how the model test I added passed with this?

I don't think this last line handles all possible datatypes of token_ids, particularly int, torch.Tensor, and ndim > 1 np.ndarrays. Maybe we should use to_py_obj above it first?

Maybe it's not needed to check for has_initial_prompt and simply always skip everything until the bos_token?

Oh good idea will use the bos_token instead of the prompt start token. Regarding always skipping, do we want to show the prompt when skip_special_tokens is False like in this example or no?

output = processor.batch_decode(pred_ids, skip_special_tokens=False)

# output: ['<|startofprev|> Mr. Quilter<|startoftranscript|><|en|><|transcribe|><|notimestamps|> On the general principles of art, Mr. Quilter writes with equal lucidity.<|endoftext|>']

Looking into this it appears the bos_token is <|endoftext|> unless otherwise set, which we couldn't use for slicing

hollance

left a comment

Hi @connor-henderson, I was asked by @sanchit-gandhi to do a code review for your PR. It looks pretty good already, just needs a bit of fine-tuning. I'm only just getting familiar with the Whisper code myself, so my opinions don't necessarily always make sense. ;-)

Sanchit suggested the argument name prompt_ids rather than initial_prompt_ids and I have to agree with that; the word prompt already implies that it precedes what happens.

I'm also wondering if this should be Optional[torch.Tensor] rather than List[int], just like input_ids in the HF NLP models. It feels "wrong" to use a list here (since we generally always use Tensors for tokenized input), even though it makes sense with how this variable is used. Maybe @sanchit-gandhi has an opinion about this?

Sounds good, renamed to prompt_ids.

With regards to the type, I wonder if this is ok here because the purpose of prompt_ids is just to update forced_decoder_ids and decoder_start_token_id, which are of type List[List[int]] and int respectively (if I'm not mistaken, saw that here)? If we used torch.Tensor, we would also have to import torch in the file with the get_prompt_ids function, and I don't believe we'd ever require tensor functionality. Just lmk whichever you prefer

What kind of window does this refer to? (I'm assuming what's called window here is what we call a chunk. If that's the case we should be consistent with the terminology.)

The "first window" refers to the initial segment of the input speech, so I believe it can be used interchangeably with chunk yes. Updated the wording to use 'chunk'

Nitpick: The forced_decoder_ids use a tuple instead of a list, so this should be a tuple too:

indexed_initial_prompt_ids = [(rank + 1, token) for rank, token in enumerate(initial_prompt_ids)]

I also used rank instead of idx and token instead of id, to be consistent with tokenizer.get_decoder_prompt_ids.
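To make the (rank, token) shape concrete, here is a minimal standalone sketch of that indexing. The helper name `index_prompt_ids` and the token ids are placeholders for illustration, not part of the PR.

```python
from typing import List, Tuple


def index_prompt_ids(prompt_ids: List[int]) -> List[Tuple[int, int]]:
    """Pair each prompt token with its 1-based decoder position."""
    return [(rank + 1, token) for rank, token in enumerate(prompt_ids)]


# Placeholder token ids, chosen only for illustration.
indexed = index_prompt_ids([100, 200, 300])
print(indexed)  # [(1, 100), (2, 200), (3, 300)]
```

Positions start at 1 because position 0 is taken by the decoder start token.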

I'm also not 100% sure that generation_config.forced_decoder_ids is always filled in here. For example, if we do the following:

forced_decoder_ids = processor.get_decoder_prompt_ids(language="de", task="translate")

predicted_ids = model.generate(input_features, forced_decoder_ids=forced_decoder_ids)

then generation_config.forced_decoder_ids may not have the <|de|> token at this point. In this situation, the correct forced_decoder_ids will be filled in by super().generate(...), as far as I can tell.

Ah good catch! Yes before the implementation was overriding provided forced_decoder_ids, but this should be fixed now. Before it was adding these from the generation_config only, but now checks kwargs first.
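A rough sketch of the "check kwargs first" lookup described here, using stand-in objects rather than the real model classes. The function name `resolve_forced_decoder_ids` is hypothetical, and falling back to generation_config before the model config is an assumption, not something confirmed in this thread.

```python
from types import SimpleNamespace
from typing import Any, Dict, List, Optional, Tuple

ForcedIds = Optional[List[Tuple[int, int]]]


def resolve_forced_decoder_ids(kwargs: Dict[str, Any], generation_config: Any, config: Any) -> ForcedIds:
    """Return the first forced_decoder_ids found: kwargs, then generation_config, then config."""
    if kwargs.get("forced_decoder_ids") is not None:
        return kwargs["forced_decoder_ids"]
    # Assumed fallback order; the thread only states that kwargs are checked first.
    for source in (generation_config, config):
        value = getattr(source, "forced_decoder_ids", None)
        if value is not None:
            return value
    return None


# Stand-ins for the real config objects, with placeholder token ids.
gen_cfg = SimpleNamespace(forced_decoder_ids=[(1, 11), (2, 12)])
cfg = SimpleNamespace(forced_decoder_ids=None)
user_ids = [(1, 21), (2, 22)]
resolved = resolve_forced_decoder_ids({"forced_decoder_ids": user_ids}, gen_cfg, cfg)
```

With this order, ids passed explicitly to generate are never overridden by what is stored on the configs.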

I prefer get_prompt_ids() as the name for this method.

Not sure that it needs to live in the tokenizer (since that involves duplicate code for the fast tokenizer). Perhaps just having it in the processor is enough?

Cool will rename and move it to just being on the processor. My thinking was that users would also want to access the method on the tokenizers directly, but I wasn't sure.

Perhaps this could be an instance variable.

Now that we've updated this decode function with your above suggestion this line was removed. This same logic is now only used once and it's on the processor. Would you still like me to make it an instance var there?

If it's just used once then it's not really worth making it an instance variable.

What is the reason for doing " " + text.strip()?

This is what was done in the Whisper implementation. I believe the model formatting expects white spaces will be removed from the beginning and at the end of the string, with one space added at the start
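For reference, the normalization being discussed amounts to the following. The function name `normalize_prompt_text` is made up for this sketch.

```python
def normalize_prompt_text(text: str) -> str:
    """Strip surrounding whitespace, then prepend exactly one space."""
    return " " + text.strip()


print(repr(normalize_prompt_text("  Mr. Quilter \n")))  # ' Mr. Quilter'
```

However the caller pads the input, the prompt text always reaches the tokenizer with a single leading space.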

Small typo in the function name. :-) But excellent work on adding these unit tests!

|

To-do list before re-requesting review

Added from @hollance's below two comments:

|

|

One more thing we'll need to do, is change the |

|

I looked a bit more into how this works today, and it turns out that 🤗 Transformers does things a bit differently than the original OpenAI code. OpenAI does the following: For the first 30-second chunk of audio, it passes the following token sequence to the model's decoder on the first iteration: Then for the second chunk of audio, it passes the following sequence to the decoder on the first iteration: For the next chunk, it uses And so on... This list of tokens that it passes in the (When you set the Our ASR The best we can do is send The suggested modifications to |

You are correct that when you do the following,

pipe = pipeline(task="automatic-speech-recognition", model="openai/whisper-tiny")

res = pipe(samples, generate_kwargs={ "prompt_ids": prompt_ids })

the pipeline will automatically pass the However, to process an audio file that is longer than 30 seconds, we have to do:

res = pipe(example, generate_kwargs={ "prompt_ids": prompt_ids }, chunk_length_s=30, stride_length_s=[6, 0])

Now the same To get the regular |

|

Ok the additional requested features are now added so I believe this is ready for re-review. Thank you for your comments!

I think I’m missing something here as I’ve tried this on >1 min of audio in the below example where I also added a debug line to decode the tokens inside of the pipeline as they were generated, and it appears to be properly sequential. In any case, if we don’t want this I’ll remove

pipe = pipeline(task="automatic-speech-recognition", model="openai/whisper-tiny")

res = pipe(samples, generate_kwargs={ "condition_on_previous_text": True, "prompt_ids": prompt_ids })

# ['<|startofprev|><|startoftranscript|><|en|><|transcribe|><|notimestamps|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel.<|endoftext|>']

# ["<|startofprev|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel.<|startoftranscript|><|en|><|transcribe|><|notimestamps|> Nor is Mr. Quilter's manner less interesting than his matter.<|endoftext|>"]

# ["<|startofprev|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel. Nor is Mr. Quilter's manner less interesting than his matter.<|startoftranscript|><|en|><|transcribe|><|notimestamps|> He tells us that at this festive season of the year with Christmas and roast beef looming before us, similarly drawn from eating and its results occur most readily to the mind.<|endoftext|>"]

# ["<|startofprev|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel. Nor is Mr. Quilter's manner less interesting than his matter. He tells us that at this festive season of the year with Christmas and roast beef looming before us, similarly drawn from eating and its results occur most readily to the mind.<|startoftranscript|><|en|><|transcribe|><|notimestamps|> He has grave doubts whether Sir Frederick Layton's work is really Greek after all and can discover in it but little of Rocky Ithaca.<|endoftext|>"]

# ["<|startofprev|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel. Nor is Mr. Quilter's manner less interesting than his matter. He tells us that at this festive season of the year with Christmas and roast beef looming before us, similarly drawn from eating and its results occur most readily to the mind. He has grave doubts whether Sir Frederick Layton's work is really Greek after all and can discover in it but little of Rocky Ithaca.<|startoftranscript|><|en|><|transcribe|><|notimestamps|> Lennils, pictures are a sort of upguards and atom paintings and Mason's exquisite itals are as national as a jingo poem. Mr. Berkett Foster's landscapes smile at one much in the same way that Mr. Carker used to flash his teeth. And Mr. John Collier gives his sitter a cheerful slap on the back before he says like a shampoo or a turkish bath. Next man<|endoftext|>"]

# ["<|startofprev|> Mr. Quilter is the apostle of the middle classes, and we are glad to welcome his gospel. Nor is Mr. Quilter's manner less interesting than his matter. He tells us that at this festive season of the year with Christmas and roast beef looming before us, similarly drawn from eating and its results occur most readily to the mind. He has grave doubts whether Sir Frederick Layton's work is really Greek after all and can discover in it but little of Rocky Ithaca. Lennils, pictures are a sort of upguards and atom paintings and Mason's exquisite itals are as national as a jingo poem. Mr. Berkett Foster's landscapes smile at one much in the same way that Mr. Carker used to flash his teeth. And Mr. John Collier gives his sitter a cheerful slap on the back before he says like a shampoo or a turkish bath. Next man<|startoftranscript|><|en|><|transcribe|><|notimestamps|> it is obviously unnecessary for us to point out how luminous these criticisms are, how delicate and expression.<|endoftext|>"]

# ["<|startofprev|> middle classes, and we are glad to welcome his gospel. Nor is Mr. Quilter's manner less interesting than his matter. He tells us that at this festive season of the year with Christmas and roast beef looming before us, similarly drawn from eating and its results occur most readily to the mind. He has grave doubts whether Sir Frederick Layton's work is really Greek after all and can discover in it but little of Rocky Ithaca. Lennils, pictures are a sort of upguards and atom paintings and Mason's exquisite itals are as national as a jingo poem. Mr. Berkett Foster's landscapes smile at one much in the same way that Mr. Carker used to flash his teeth. And Mr. John Collier gives his sitter a cheerful slap on the back before he says like a shampoo or a turkish bath. Next man it is obviously unnecessary for us to point out how luminous these criticisms are, how delicate and expression.<|startoftranscript|><|en|><|transcribe|><|notimestamps|> On the general principles of art and Mr. Quilter writes with equal lucidity.<|endoftext|>"]

Aimed to address this with the new sequential loop over chunks of the input. Right now this way is incompatible with |

hollance

left a comment

Thanks for working on this feature, @connor-henderson . I think you were very inventive in coming up with a solution that allows us to use initial_prompt and condition_on_previous_text as in OpenAI. 😄

However, your implementation doesn't seem to fit in very well with the current design of Transformers. I'll let my colleagues at HF weigh in too, but it might be better to split this functionality as follows:

1. Add the prompt_ids to model.generate() as in your earlier version of the PR. All this does is insert the prompt in the <|startofprev|> section. This doesn't give us the OpenAI functionality yet, it only adds <|startofprev|> support to the modeling and tokenizer code.

2. Create a new pipeline that is specific to Whisper that works more like the OpenAI inference code does. The logic for managing the <|startofprev|> section then sits in the new pipeline's loop, not in the model.

(Perhaps step 2 could be a separate PR, to keep the complexity of these PRs down a bit.)

Adding a loop in model.generate() is a clever solution to get the pipeline to work sequentially, but it's also a bit hacky. I don't think it's the right approach for Transformers.

Rather than returning the prompt_ids as a list of integers, it would be preferable to have them as a tensor. But even better, get_prompt_ids() should use the return_tensors argument just like tokenizer does, so that the caller can decide between numpy or torch tensors, or a list of integers.

|

cc'ing in @gante re |

Thanks @hollance I definitely agree splitting this into >1 PR is ideal, have pushed back up code for number 1 above so this can just address that portion. It now implicitly does |

|

Curious if by adding |

|

The reason I asked for the |

gante

left a comment

The PR LGTM as it is, thank you for the contribution 🙌

BTW, the code changes do not match the description at the top. From what I gathered in the comments, there will be a follow-up PR, correct? In that case, would you mind updating the PR, before I tag a core maintainer for a final review? :)

Is there a reason behind this slicing? Intuitively it makes sense to me, but I'm curious to know if there is a reference behind this choice :)

Sure I'll leave a comment in the code too, this is done to match Whisper's implementation. I believe the reason they do the -1 is to make room for the first token to generate, and the reason they do // 2 is to halve it to share context space with a prefix if one is provided (which also gets halved). I don't believe there's prefix support yet in transformers so technically the // 2 isn't necessary at this point but I didn't want to confuse future work around that if it happens. There's a good clarification of prompt vs prefix here if it's of interest.

Hello @connor-henderson, as I am using the prompting feature I noticed a bug for long prompts. It might be caused by the slicing, where it should be text_prompt_ids = text_prompt_ids[-(self.config.max_length // 2 - 1) :], to correctly account for the first token <|startofprev|>.
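To see why the parentheses matter, here is a small arithmetic check. It assumes the slice under discussion was written without parentheses, as `text_prompt_ids[-self.config.max_length // 2 - 1 :]`, and uses `max_length = 448` purely as an illustrative value. Neither detail is quoted in this thread.

```python
max_length = 448  # assumed value for illustration
prompt = list(range(1000))  # stand-in for a long list of prompt token ids

# Unary minus binds tighter than //, so this is (-max_length) // 2 - 1 == -225.
assumed_original = prompt[-max_length // 2 - 1 :]

# Parenthesised form from the comment above: -(448 // 2 - 1) == -223.
proposed = prompt[-(max_length // 2 - 1) :]

print(len(assumed_original), len(proposed))  # 225 223
```

Under these assumptions the unparenthesised form keeps 225 text tokens, while the proposed form keeps 223, so that adding the single <|startofprev|> token gives 224, exactly half of max_length.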

Hey @Helene-Maxcici, feel free to open a new issue to track this bug, tagging myself (and optionally @connor-henderson). In particular, it would be super helpful to have a reproducible code snippet to emulate the behaviour locally. See the following page for details: https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#submitting-a-bug-related-issue-or-feature-request

hollance

left a comment

This PR is shaping up nicely, @connor-henderson! I think this PR has the right amount of changes and then we can figure out how to do the sequential generation in a follow-up PR.

I've added a bunch of remarks and suggestions so we can make this fit as well into Transformers as possible. 😄

I'd also like to invite my colleagues @sanchit-gandhi and @ArthurZucker to have a look at these changes.

My suggestion is prompt_ids: Optional[torch.Tensor] = None but I'll let my colleagues weigh in too. @sanchit-gandhi @ArthurZucker

Changed to this suggestion

Nice! I think this now supports the different ways that forced_decoder_ids may be passed in?

1. Through model.config.forced_decoder_ids = processor.get_decoder_prompt_ids(language=..., task=...)

2. Through model.generate(input_features, forced_decoder_ids=forced_decoder_ids)

3. Through model.generate(input_features, language=..., task=...)

It would be good if there are unit tests for these different methods.

I don't believe model.generate allows passing in task or language directly as in 3. above, but I've now added tests for the other two

It does allow that (and I think it might even be the preferred method now) but for some reason the language needs to be the token, such as "<|ja|>" rather than "ja".

@connor-henderson update on the language code: we now support passing the language token, the language code, or the language name. See this (very recent) PR :)

(not sure if this info has gotten to you, many conversations in parallel in this PR)

Note that the language code change was @connor-henderson's most recent PR! This forced_generation_ids logic is in place so that the code is backward compatible with our previous way of handling the language/task, where we either set it in the config as config.forced_decoder_ids, or explicitly as forced_decoder_ids to the generate method (see #21965 (comment))

haha derp, I didn't look at the author 🙈 my bad!

Not sure I'm happy with this since token_ids is also used below in the call to super().decode(...).

Yea I agree, moved this prompt removal code after that super().decode(...) call to _decode so this conversion isn't necessary

Edge case: prompt_end_idx is not set when token_ids has length 1. Maybe rewrite it to this:

if skip_special_tokens and isinstance(token_ids, list):
    prompt_token_id = self.convert_tokens_to_ids("<|startofprev|>")
    if prompt_token_id in token_ids:
        for i in range(1, len(token_ids)):
            if token_ids[i] in self.all_special_ids:
                token_ids = token_ids[i:]
                break

Although perhaps it's easiest to check if the very first token is <|startofprev|> rather than doing prompt_token_id in token_ids?
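The "check the very first token" variant could be sketched as a standalone function like the one below. The name `strip_prompt`, its parameters and the token ids are placeholders, not the tokenizer's actual method or vocabulary.

```python
from typing import List, Set


def strip_prompt(token_ids: List[int], prompt_token_id: int, special_ids: Set[int]) -> List[int]:
    """Drop a leading prompt section, keeping everything from the next special token on."""
    if not token_ids or token_ids[0] != prompt_token_id:
        return token_ids  # no prompt at the start (also covers empty input)
    for i in range(1, len(token_ids)):
        if token_ids[i] in special_ids:
            return token_ids[i:]
    return token_ids  # prompt token present but no later special token to cut at


# Placeholder ids: 900 plays <|startofprev|>, 901 plays <|startoftranscript|>.
SPECIAL_IDS = {900, 901, 902}
stripped = strip_prompt([900, 1, 2, 901, 3, 4], prompt_token_id=900, special_ids=SPECIAL_IDS)
print(stripped)  # [901, 3, 4]
```

Guarding on an empty list before indexing position 0 avoids an IndexError, and comparing only the first element avoids scanning the whole list.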

Since this is the same logic as in the regular tokenizer, maybe we can extract it into a shared helper function?

moved some of the functionality into a helper and left some for what I think is the right reusability/readability tradeoff, lmk if you think more should be abstracted

I can understand putting this into a free function but if it's only used in one class, we generally keep it as a member function.

for sure, removed this change. i'd been moving the tests around and at one point I had two classes using this

Nice test! I'd like to see a few more tests where you also change the forced_decoder_ids (see my comment above). The way the forced_decoder_ids get passed around is a bit brittle (due to the code for that changing a few times) and so we should make sure we have solid tests, since it's too easy for someone to change how this works and inadvertently break something.

Could you also add some tests for edge cases?

For example: processor.get_prompt_ids("") or processor.get_prompt_ids("<|startofprev|> Mr. <|startofprev|> Quilter")

The second will definitely confuse the model and decoding if they were passed to the current get_prompt_ids as is, would you prefer we strip the prompt start token or raise an error that it was included? I'll push up a change that strips it for now, lmk which you prefer and if you'd want to log a warning as well

I don't really know what would be the best approach here, was just trying to think of things that might go wrong. ;-)

Perhaps raising an error on unexpected input is the best choice, but only if it doesn't add a lot of complexity to the code.

Looks like they have their tiktoken package handle it and it raises an error if any special token is included, so will look to do the same

I feel like this is most readable / simplest as one test with comments clarifying the cases, lmk if you want them split into separate unit tests

I agree it's very readable but there's a potential issue: the model will keep state around, i.e. it will change the model.generation_config object with the new forced_decoder_ids and this may affect the next test. So I think it's better to instantiate a new model before each test.

Maybe it's also a good idea to test what happens after you do the following, just to make sure the code can handle both of these things being None:

model.config.forced_decoder_ids = None

model.generation_config.forced_decoder_ids = None

Added a case for the above which involved a change in generate I'll call out below. I was aiming to order the tests to prevent conflicting state issues but you're right they're more brittle that way, split them into individual tests

Sorry, I wasn't able to fully understand the last comment - for testing the case when:

model.config.forced_decoder_ids = None

model.generation_config.forced_decoder_ids = None

is this tested?

Sorry, moving parts, we had a test explicitly for this when there were 5 test cases. Then we trimmed them, per this #22496 (comment) I changed the test_generate_with_prompt_ids_and_no_non_prompt_forced_decoder_ids test to use whisper-base.en and return_timestamps=True. I just tested it tho and realized that combination didn't actually set those attributes to None, so I updated the test to explicitly set those two to None.

tl;dr it was tested, then wasn't, now is again

went back to using 'in' check here instead of indexing to 0 in token_ids since it errors if empty

The reason I suggested putting it inside a separate if skip_special_tokens: is that the in operation needs to scan through the list, which is slow, and we can avoid it if skip_special_tokens is False.

good point i'll adapt it to check the index 0

hollance

left a comment

We're slowly getting there. 😄 (The reason I'm being so nitpicky is that we're going to be changing a model that's already being used a lot, so we have to be very careful to make the right decisions.)

Doc comment still refers to the old type hints.

Would it be better to strip out all special tokens / raise an error?

Not sure what OpenAI does here. In "condition on previous text mode" they don't include the <|startoftranscript|><|en|><|transcribe|><|notimestamps|> tokens when they put the previous text in the <|startofprev|> section (since that would be problematic). But I'm not sure if they also strip out the actual timestamps such as <|1.5|> etc, that would be worth looking into.

I think get_prompt_ids should not accept any tokens >= processor.tokenizer.eos_token, so we should either strip these out or raise an error. If we do want to allow timestamp tokens, then it should accept tokens > processor.tokenizer.all_special_ids[-1], since that's where the timestamp tokens begin.

Looks like they have their tiktoken package handle it and it raises an error if any special token is included, so will look to do the same

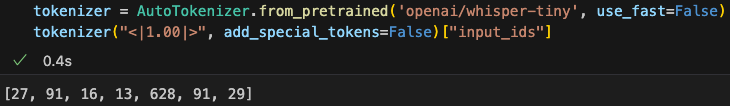

These are the tokens they raise an error on, so timestamps are included. transformers uses the same time_precision of 0.02, but notably even tho <|1.00|> is caught by OpenAI's special tokens check, any number that doesn't have hundredths place precision like <|1.0|> isn't. Opted to implement catching any positive decimal number inside the special token brackets
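A check along these lines might look like the sketch below. The regular expression is a guess at what "any positive decimal number inside the special token brackets" could mean (it also accepts whole numbers and zero), and `validate_prompt_text` plus the special-token list are hypothetical, not the code merged in this PR.

```python
import re
from typing import Iterable

# Matches a non-negative integer or decimal wrapped in special-token brackets,
# for example <|1.0|> or <|1.00|>. Assumed pattern, for illustration only.
TIMESTAMP_PATTERN = re.compile(r"<\|\d+(?:\.\d+)?\|>")


def validate_prompt_text(text: str, special_tokens: Iterable[str]) -> None:
    """Raise ValueError if the prompt text contains a special token or a timestamp token."""
    for token in special_tokens:
        if token in text:
            raise ValueError(f"Prompt contains disallowed special token: {token}")
    match = TIMESTAMP_PATTERN.search(text)
    if match:
        raise ValueError(f"Prompt contains disallowed timestamp token: {match.group(0)}")


# Illustrative subset of special tokens taken from the examples in this thread.
SPECIAL_TOKENS = ["<|startofprev|>", "<|startoftranscript|>", "<|endoftext|>"]
validate_prompt_text(" Mr. Quilter", SPECIAL_TOKENS)  # passes silently
```

Matching on the bracketed number rather than a fixed list covers both <|1.00|> and <|1.0|>, which is the gap described above.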

Your solution is probably fine but a simpler approach would be to make sure no token has a value >= processor.tokenizer.eos_token. ;-)

I guess our tokenizer only decodes timestamp tokens, but doesn't know how to encode them?

Timestamps start at 50364. Any token id higher than that is a timestamp token.
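As a small illustration of that id range, the sketch below maps a timestamp token id to seconds, combining the 50364 offset from this comment with the time_precision of 0.02 mentioned earlier in the thread. The function names are made up, and the offset is taken at face value from the comment; it may differ for other vocabularies.

```python
TIMESTAMP_BEGIN = 50364  # first timestamp token id, per the comment above
TIME_PRECISION = 0.02  # seconds per timestamp step, as quoted earlier in the thread


def is_timestamp_token(token_id: int) -> bool:
    """True for ids at or above the first timestamp token."""
    return token_id >= TIMESTAMP_BEGIN


def timestamp_seconds(token_id: int) -> float:
    """Convert a timestamp token id to its offset in seconds."""
    if not is_timestamp_token(token_id):
        raise ValueError(f"{token_id} is not a timestamp token")
    return (token_id - TIMESTAMP_BEGIN) * TIME_PRECISION


# 50 steps of 0.02 s after the first timestamp token is roughly one second.
print(round(timestamp_seconds(TIMESTAMP_BEGIN + 50), 2))
```

A range check like `is_timestamp_token` is the simpler alternative to pattern matching on the token text suggested above.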

The reason I suggested putting it inside a separate if skip_special_tokens: is that the in operation needs to scan through the list, which is slow, and we can avoid it if skip_special_tokens is False.

Same here. I'd prefer to do the has_prompt check after checking for skip_special_tokens. (It's only a small thing and skip_special_tokens will be True most of the time anyway, so consider this a nitpick. ;-) )

This is done solely for handling the case where prompt_ids are passed in but the generation config and model config's forced decoder ids are both None. It's essentially just changing the order of operations so that we can cleanly check forced_decoder_ids is None and prompt_ids is not None to then add non-prompt forced decoder ids; none of the other functionality should change

|

@AvivSham Thanks for reporting and @connor-henderson thanks for investigating! I think we're good to merge 👍 |

|

Thank you so much for adding this! I've found that I occasionally get the following: My workaround is to catch the exception and try again without the prompt_ids. |

|

Do you have a reproducible example for this @dgram0? That seems like a serious enough bug that needs investigating further. |

Sounds like an interesting idea. Would you mind opening a new issue for this? Thanks! |

|

To get prompting working with fine-tuning, we probably don't want to explicitly add 'prompted' examples per-se, but rather split longer examples up into shorter ones and feed them sequentially through the model, providing previous passages as 'context' to the model. For example, if we had a training sample that looked like: Currently what we do is feed it to the model all at once: What we can do is feed the first sentence in: Then the second sentence, with the first sentence as context: And then the third, with both the first and second sentences as context: At inference time, we then just provide the "context" as our prompts: See section 2.3 of the Whisper paper for an in-depth explanation as to how they achieve this during pre-training. We essentially want to do the same for fine-tuning. For this to work, ideally we need an original sentence that is >> 30s in duration. That way when we split it up, we don't have super short examples that we feed to the model. |
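The data preparation described here could be sketched roughly as follows: split a long transcript into sentences and pair each one with everything before it as context. The function name `build_context_pairs` and the sample sentences are placeholders; aligning each sentence with its audio segment is left out entirely.

```python
from typing import List, Tuple


def build_context_pairs(sentences: List[str]) -> List[Tuple[str, str]]:
    """Return (context, target) pairs where context is all preceding sentences joined by spaces."""
    pairs = []
    for i, target in enumerate(sentences):
        context = " ".join(sentences[:i])  # empty string for the first sentence
        pairs.append((context, target))
    return pairs


# Placeholder sentences standing in for a long training transcript.
pairs = build_context_pairs(["First sentence.", "Second sentence.", "Third sentence."])
for context, target in pairs:
    print(repr(context), "->", repr(target))
```

At training time the context would go in the <|startofprev|> section and the target after <|startoftranscript|>, mirroring how prompts are supplied at inference.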

I'll try reproducing in a small toy example. It's reproducible on my side with the fine-tuned large private model I've been working with. |

The following triggers the bug on the 13th iterations of the loop. (Usually, it takes a lot more iterations.) |

|

@dgram0 thanks for sharing, I was able to repro this. As far as its relation to prompting I think this is another case of prompt sensitivity as opposed to a bug, but it may still be of interest with regards to Whisper generally since it's the same error message as issue #22682. I noticed that joining the prompts by |

|

@connor-henderson It's a bit of a contrived example meant just to recreate the issue without having to loop too much and at the same time show what may be considered a normal use case. Even without it predicting non-English characters or words you'll eventually encounter the issue within a few hundred loops. |

The following still joins the prompts using |

|

Thanks, @dgram0. Would you have time to look at this bug @connor-henderson, since you're most familiar with this code? If not, I can have a look. EDIT: LOL, I'm way too slow. Should probably refresh my browser before commenting. Thanks for making these new issues, Connor. 😄 |

|

@connor-henderson @sanchit-gandhi Hey, did we ever resolve the If I do the following,

pipe = pipeline(task="automatic-speech-recognition", model="openai/whisper-tiny")

prompt_ids = pipe.tokenizer.get_prompt_ids("Hello, world!", return_tensors="pt")

I get the error,

TypeError: _batch_encode_plus() got an unexpected keyword argument 'add_prefix_space'

It works fine if I create a I seem to recall this issue came up before but not sure if anything was decided for it? |

* initial working additions * clean and rename, add cond stripping initial prompt to decode * cleanup, edit create_initial_prompt_ids, add tests * repo consistency, flip order of conditional * fix error, move the processor fn to the tokenizer * repo consistency, update test ids to corresponding tokenizer * use convert_tokens_to_ids not get_vocab... * use actual conditional in generate * make sytle * initial address comments * initial working add new params to pipeline * first draft of sequential generation for condition_on_previous_text * add/update tests, make compatible with timestamps * make compatible with diff. input kwargs and max length * add None check * add temperature check * flip temp check operand * refocusing to prev pr scope * remove the params too * make style * edits, move max length incorporating prompt to whisper * address comments * remove asr pipeline prompt decoding, fix indexing * address comments (more tests, validate prompt) * un-comment out tests (from debug) * remove old comment * address comments * fix typo * remove timestamp token from test * make style * cleanup * copy method to fast tokenizer, set max_new_tokens for test * prompt_ids type just pt * address Amy's comments * make style


forced_decoder_ids = [(1, 6), (2, 7), (3, 8)]

output = model.generate(
    input_features, max_new_tokens=5, forced_decoder_ids=forced_decoder_ids, prompt_ids=prompt_ids

There was a problem hiding this comment.

Why do we allow passing prompt_ids as a numpy array here?
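For context on the call under review, which passes both `forced_decoder_ids` and `prompt_ids`: one plausible way the two could be combined is sketched below in plain Python. This is an illustration under stated assumptions, not the code from this PR's diff. The helper name `merge_prompt_into_forced_ids`, the token id values, and the assumption that the first prompt id is the prompt-start token are all hypothetical. The `max_new_tokens` adjustment mirrors the commit note about "max length incorporating prompt".

```python
def merge_prompt_into_forced_ids(prompt_ids, decoder_start_token_id,
                                 forced_decoder_ids, max_new_tokens=None):
    """Hypothetical helper: place prompt tokens ahead of the forced tokens.

    Assumes prompt_ids[0] is the prompt-start token and the rest is prompt text.
    """
    prompt_start_id, *text_prompt_ids = prompt_ids

    # Prompt text first, then the usual decoder start token, then the
    # originally forced tokens in their original order.
    sequence = [
        *text_prompt_ids,
        decoder_start_token_id,
        *[token for _position, token in forced_decoder_ids],
    ]
    # Re-number positions from 1, because position 0 is taken by the
    # prompt-start token that now begins decoding.
    merged = [(position + 1, token) for position, token in enumerate(sequence)]

    # Let the prompt text not count against the caller's new-token budget.
    if max_new_tokens is not None:
        max_new_tokens += len(text_prompt_ids)

    return prompt_start_id, merged, max_new_tokens


# Made-up ids: 100 stands in for the prompt-start token, 50 for the decoder start token.
start_id, merged_ids, budget = merge_prompt_into_forced_ids(
    prompt_ids=[100, 11, 12],
    decoder_start_token_id=50,
    forced_decoder_ids=[(1, 6), (2, 7), (3, 8)],
    max_new_tokens=5,
)
```

Under these assumptions the forced tokens keep their relative order but shift right by the length of the prompt text plus one.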

task=None,
language=None,
is_multilingual=None,
prompt_ids: Optional[torch.Tensor] = None,

There was a problem hiding this comment.

I think prompt_ids should not be allowed to be a numpy array given its signature (see: https://github.com/huggingface/transformers/pull/22496/files#r1467369773)


What does this PR do?

Closes #22395, thank you @sanchit-gandhi for the descriptive ask!

Note: due to initial scope expansion, the commit history includes initial work towards condition_on_previous_text, always_use_initial_prompt, and pipeline integration, but these efforts have been pushed to a later PR.

This pull request adds 3 new functionalities + tests to support initial prompting functionality within Whisper's model.generate() and tokenizer:

* prompt_ids param for model.generate()
* get_prompt_ids Processor method to create initial prompt ids to pass to generate from a passed-in string
* stripping the prompt from decoded output when skip_special_tokens=True

Example new API usage:
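A toy sketch of the decode behaviour described above, using the token layout from the `batch_decode` output quoted earlier in this conversation. It is not the PR's implementation: `strip_prompt`, `decode`, and the string "tokens" are hypothetical stand-ins for the tokenizer internals, and the transcript text is shortened.

```python
PROMPT_START = "<|startofprev|>"
TRANSCRIPT_START = "<|startoftranscript|>"
SPECIAL_TOKENS = {
    PROMPT_START, TRANSCRIPT_START,
    "<|en|>", "<|transcribe|>", "<|notimestamps|>", "<|endoftext|>",
}


def strip_prompt(tokens):
    """Drop a leading prompt: everything before <|startoftranscript|>."""
    if tokens and tokens[0] == PROMPT_START and TRANSCRIPT_START in tokens:
        return tokens[tokens.index(TRANSCRIPT_START):]
    return tokens


def decode(tokens, skip_special_tokens=False):
    """Join tokens; when skipping specials, also drop the prompt text."""
    if skip_special_tokens:
        tokens = [t for t in strip_prompt(tokens) if t not in SPECIAL_TOKENS]
    return "".join(tokens)


# Prompt " Mr. Quilter" followed by the usual start tokens and a transcript.
tokens = [
    "<|startofprev|>", " Mr.", " Quilter",
    "<|startoftranscript|>", "<|en|>", "<|transcribe|>", "<|notimestamps|>",
    " On", " the", " general", " principles", " of", " art.",
    "<|endoftext|>",
]

with_specials = decode(tokens, skip_special_tokens=False)
without_prompt = decode(tokens, skip_special_tokens=True)
```

With `skip_special_tokens=False` the prompt stays visible in the output, which matches the example discussed in the review; with `skip_special_tokens=True` only the transcript text remains.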

Before submitting

Documentation: haven't added any outside of documenting the new generate() arg directly on the function.

Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag members/contributors who may be interested in your PR.

@sanchit-gandhi